Digital Twins for Autonomous Systems in Contested Environments: A Research Imperative

The future of robotics demands a fundamental rethinking of how autonomous systems operate in challenging, real-world environments. Traditional approaches that treat individual systems in isolation are proving inadequate for the complex scenarios that define modern robotics applications. From underwater vehicles navigating murky waters while avoiding obstacles to aerial drones conducting surveillance in GPS-jammed environments, today's autonomous systems must operate in contested spaces where multiple agents interact, compete, and coordinate simultaneously.

This challenge has highlighted critical limitations in existing AI methodologies. A recent editorial on neurosymbolic AI identifies a paradigmatic shift towards hybrid approaches that combine symbolic reasoning with data-driven methods [1]. This shift emerges from the recognition that neither purely symbolic nor purely data-driven approaches can adequately address the complexity of contested multi-agent environments. Symbolic AI methods excel at logical reasoning but struggle with quantitative uncertainty in dynamic multi-agent scenarios. Conversely, data-driven methods demonstrate impressive pattern recognition capabilities yet lack the expressiveness needed for complex cognitive reasoning about mission objectives and safety constraints when multiple agents interact [1]. These complementary limitations create a clear gap in current capabilities—one that becomes critical when autonomous systems must make split-second decisions in environments where their actions affect and are affected by other intelligent agents.

The solution lies in fundamentally reimagining digital twin frameworks. Rather than serving as mere real-time digital replicas of individual systems, next-generation digital twins must evolve into comprehensive simulation environments that model multiple interacting agents. This transformation requires integrating multi-agent reinforcement learning (MARL) principles, which focus on studying the behavior of multiple learning agents coexisting in shared environments where each agent pursues its own rewards while influencing others [2]. This multi-agent perspective addresses a crucial reality of contested environments: most real-world scenarios involve elements of both cooperation and competition [2]. Consider multiple autonomous vehicles planning paths—each seeks to minimize journey time while sharing the collective interest of avoiding collisions [3]. This creates complex social dilemmas where agents must balance individual objectives against collective safety requirements [4], a challenge that traditional single-agent approaches cannot adequately address.
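The path-planning example above can be made concrete with a toy reward function. The following is a minimal sketch, not a method taken from the cited works: the intersection scenario, the travel times, and the names `StepOutcome`, `mixed_motive_reward`, and `intersection_rewards` are all hypothetical. It shows only how an individual term (journey time) and a shared term (collision penalty) can sit in the same reward signal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepOutcome:
    """Result of one decision step for a single vehicle (hypothetical model)."""
    travel_time: float  # time units this vehicle spent on the step
    collided: bool      # True if the vehicles collided on this step


def mixed_motive_reward(outcome, time_weight=1.0, collision_penalty=100.0):
    """Combine an individual objective with a shared safety objective."""
    individual = -time_weight * outcome.travel_time             # competitive part
    shared = -collision_penalty if outcome.collided else 0.0    # cooperative part
    return individual + shared


def intersection_rewards(a_goes, b_goes):
    """Rewards for two vehicles that each either go or yield at a crossing.

    Assumed toy numbers: going costs 1 time unit, yielding costs 3,
    and a collision happens only if both vehicles go.
    """
    collided = a_goes and b_goes
    time_a = 1.0 if a_goes else 3.0
    time_b = 1.0 if b_goes else 3.0
    return (
        mixed_motive_reward(StepOutcome(time_a, collided)),
        mixed_motive_reward(StepOutcome(time_b, collided)),
    )


if __name__ == "__main__":
    for a_goes in (True, False):
        for b_goes in (True, False):
            print(a_goes, b_goes, intersection_rewards(a_goes, b_goes))
```

Under these assumed numbers, each vehicle prefers that the other one yields, yet both do far worse if neither does, which is the tension between individual and collective interest described above.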

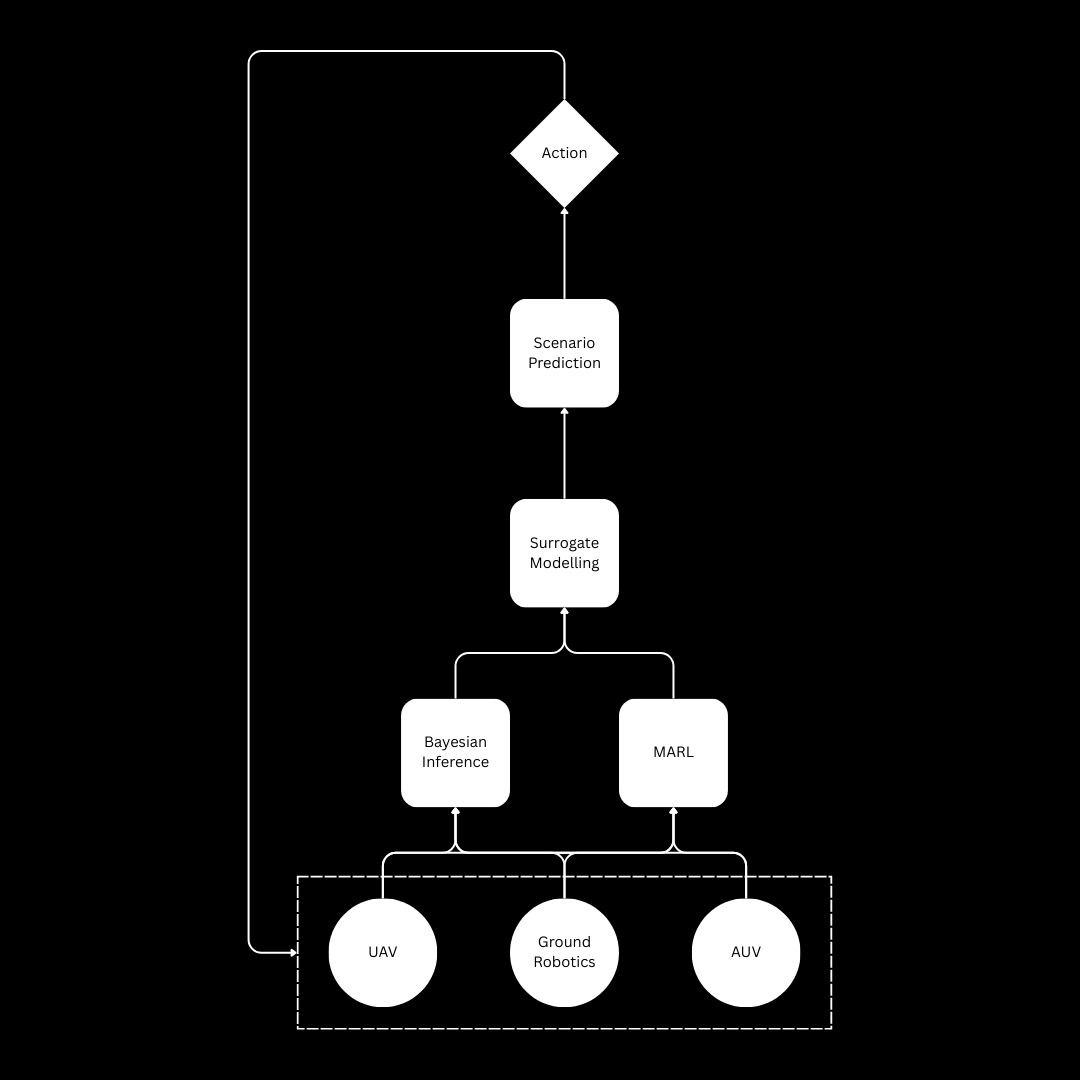

Figure 1: Integrated digital twin architecture for autonomous systems in contested environments, combining Bayesian inference for uncertainty quantification with multi-agent reinforcement learning (MARL) to enable real-time scenario prediction and surrogate modeling across UAV, ground robotics, and AUV domains.

Advanced digital twin architectures built on these principles would actively predict multi-agent interactions and emergent behaviors. Machine learning-based surrogate models would approximate complex system dynamics cheaply enough to evaluate thousands of multi-agent scenarios in real time. When aerial drones encounter unexpected weather while operating near other aircraft, such a system could simulate several coordination strategies, estimate each strategy's probability of success given the other agents' likely responses, and recommend an action while the physical drones continue their missions. The integration of Bayesian networks for uncertainty quantification within mixed-sum game environments, where agents' interests are neither fully aligned nor fully opposed, presents particular research potential. This combination would enable systems to reason probabilistically about uncertain outcomes while maintaining structured knowledge representation about mission contexts and constraints. The result would be autonomous systems capable of understanding, predicting, and adapting to complex contested environments through continuous interaction and learning.
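As a rough illustration of evaluating strategies against another agent's probable response, the sketch below swaps the Bayesian network for a much simpler stand-in: a Beta-Bernoulli belief over whether a neighbouring aircraft yields. The payoff table, the strategy names, and the identifiers `BetaBelief`, `PAYOFFS`, `expected_payoff`, and `recommend` are assumptions made for this example, not part of any cited framework.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta posterior over the probability that another agent yields."""
    alpha: float = 1.0  # prior pseudo-count of observed yields
    beta: float = 1.0   # prior pseudo-count of observed non-yields

    def update(self, yielded: bool) -> None:
        """Conjugate update after observing one interaction."""
        if yielded:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    @property
    def mean(self) -> float:
        """Posterior mean probability that the other agent yields."""
        return self.alpha / (self.alpha + self.beta)


# Hypothetical payoffs: PAYOFFS[strategy][other_agent_yields]
PAYOFFS = {
    "proceed": {True: 10.0, False: -100.0},  # fast, but risky if the other does not yield
    "hold": {True: -2.0, False: -2.0},       # safe, small delay either way
}


def expected_payoff(strategy: str, p_yield: float) -> float:
    """Expected payoff of a strategy under the current belief."""
    row = PAYOFFS[strategy]
    return p_yield * row[True] + (1.0 - p_yield) * row[False]


def recommend(belief: BetaBelief) -> str:
    """Pick the strategy with the highest expected payoff."""
    return max(PAYOFFS, key=lambda s: expected_payoff(s, belief.mean))


if __name__ == "__main__":
    belief = BetaBelief()
    print(recommend(belief), round(belief.mean, 3))
    for _ in range(30):          # observe the neighbour yielding 30 times
        belief.update(True)
    print(recommend(belief), round(belief.mean, 3))
```

With the uniform prior the cautious strategy wins, and the recommendation changes only once enough evidence of yielding has accumulated. A full digital twin would replace the fixed payoff table with surrogate-model rollouts.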

Real-world contested environments rarely resemble simple game-theoretic scenarios with clear cooperation-defection choices. Instead, they involve sequential social dilemmas where agents take multiple actions over time with nuanced distinctions between cooperative and competitive behavior [4]. Unlike simple matrix games, these scenarios require systems capable of learning through autocurricula: as each agent improves, it changes the conditions under which every agent, itself included, must learn, creating feedback loops that produce distinct learning phases with significant practical implications [6]. These dynamics are especially apparent in adversarial settings, where groups of agents race to counter opposing strategies. Such emergent behaviors are precisely what autonomous systems in contested environments must understand and adapt to in real time.

Multi-agent deep reinforcement learning is inherently difficult. Because every agent is learning at once, the environment appears non-stationary from any single agent's perspective, and the Markov property is violated: transitions and rewards no longer depend solely on that agent's current state [9], but also on the changing behavior of the other agents. These difficulties present both challenges and opportunities for digital twin architectures. They call for hybrid neurosymbolic approaches that can reason about uncertainty while maintaining the ability to explain decisions and adapt to unexpected circumstances. Modular architectures capable of handling diverse scenarios would demonstrate considerable research merit across domains: aerial drones navigating contested airspace with other aircraft [7], underwater vehicles exploring regions with competing research vessels, or ground-based robots operating in disaster zones alongside human teams [8]. Each domain presents unique mixed-sum scenarios requiring the balance of individual mission success against collective safety and efficiency.
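The non-stationarity described here can be seen in a two-agent toy game. The sketch below is an illustration under assumed numbers, not an experiment from the cited survey: the payoff table and the function names `expected_reward_a` and `best_response_a` are hypothetical. It shows that agent A's expected reward for a fixed action, and even its best action, shifts as agent B's policy changes, although nothing in A's own state has changed.

```python
# Hypothetical payoffs to agent A, indexed by (A's action, B's action).
PAYOFF_A = {
    ("cooperate", "cooperate"): 4.0,
    ("cooperate", "defect"): 0.0,
    ("defect", "cooperate"): 3.0,
    ("defect", "defect"): 1.0,
}

ACTIONS = ("cooperate", "defect")


def expected_reward_a(action_a: str, p_b_cooperates: float) -> float:
    """Expected reward to A for a fixed action, given B's current policy."""
    return (
        p_b_cooperates * PAYOFF_A[(action_a, "cooperate")]
        + (1.0 - p_b_cooperates) * PAYOFF_A[(action_a, "defect")]
    )


def best_response_a(p_b_cooperates: float) -> str:
    """A's best action against B's current policy."""
    return max(ACTIONS, key=lambda a: expected_reward_a(a, p_b_cooperates))


if __name__ == "__main__":
    # B's policy drifts as B learns; A's "environment" drifts with it.
    for p in (0.2, 0.4, 0.6, 0.9):
        rewards = {a: round(expected_reward_a(a, p), 2) for a in ACTIONS}
        print(p, rewards, best_response_a(p))
```

A single-agent learner that treats B as part of a fixed environment would see the value of the same action change over time, which is why a digital twin for contested settings needs to model the other agents explicitly.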

The convergence of neurosymbolic AI, digital twin technology, and multi-agent reinforcement learning presents a significant opportunity for advancing autonomous systems capability in contested environments. By combining the probabilistic reasoning of Bayesian networks with the structured knowledge representation of digital twins and multi-agent learning capabilities, researchers can develop systems that reason simultaneously about observations, mission context, and multi-agent dynamics. As autonomous systems increasingly operate in contested multi-agent environments, research into quantifying and reasoning about uncertainty while learning from interaction becomes essential. The operational stakes are considerable for systems that cannot explain decisions, adapt when facing unexpected circumstances, or coordinate effectively with other agents. Developing the foundational frameworks for such systems represents a critical research frontier: the goal is systems that operate safely and effectively by treating uncertainty and multi-agent complexity as fundamental aspects of intelligent decision-making. This research directly addresses the call for hybrid AI frameworks identified in recent neurosymbolic AI research [1], and it targets both increasingly complex operational requirements and fundamental limitations in current approaches. The future of autonomous systems depends on integrated frameworks that can handle the contested, uncertain, and multi-agent nature of real-world environments.

References

[1] Meli, D., Sridharan, M., Perri, S. and Katzouris, N. (2025). Editorial: Merging symbolic and data-driven AI for robot autonomy. Frontiers in Robotics and AI, 12:1662674.

[2] Albrecht, S.V., Christianos, F. and Schäfer, L. (2024). Multi-Agent Reinforcement Learning: Foundations and Modern Approaches. MIT Press.

[3] Shalev-Shwartz, S., Shammah, S. and Shashua, A. (2016). Safe, Multi-Agent, Reinforcement Learning for Autonomous Driving. arXiv:1610.03295.

[4] Leibo, J.Z., Zambaldi, V., Lanctot, M., Marecki, J. and Graepel, T. (2017). Multi-agent Reinforcement Learning in Sequential Social Dilemmas. AAMAS 2017. arXiv:1702.03037.

[6] Leibo, J.Z., Hughes, E., et al. (2019). Autocurricula and the Emergence of Innovation from Social Interaction: A Manifesto for Multi-Agent Intelligence Research. arXiv:1903.00742v2.

[7] Ding, Y., Yang, Z., Pham, Q.-V., Zhang, Z. and Shikh-Bahaei, M. (2023). Distributed Machine Learning for UAV Swarms: Computing, Sensing, and Semantics. arXiv:2301.00912.

[8] Bettini, M., Kortvelesy, R., Blumenkamp, J. and Prorok, A. (2022). VMAS: A Vectorized Multi-Agent Simulator for Collective Robot Learning. The 16th International Symposium on Distributed Autonomous Robotic Systems. Springer. arXiv:2207.03530.

[9] Hernandez-Leal, P., Kartal, B. and Taylor, M.E. (2019). A survey and critique of multiagent deep reinforcement learning. Autonomous Agents and Multi-Agent Systems, 33(6), 750-797.