Mind The Trap: Verification Principles in LLMs for Automated Scientific Discovery

This exploration examines the potential parallels between verification principles central to Logical Positivism and contemporary approaches to ensuring reliability in LLM outputs for scientific discovery. While verification is essential for scientific integrity, an excessive focus on verifiability may inadvertently constrain LLMs' potential for generating novel scientific hypotheses and explanations, potentially replicating the limitations that historically affected the Logical Positivist movement.

1. Historical Context: Lessons from Logical Positivism

In the early to mid-20th century, Logical Positivism, with A.J. Ayer as one of its key proponents in the English-speaking world, positioned the verification principle as central to philosophical inquiry [1]. This principle, grounded in empiricism and advocated by the Vienna Circle, posited that a statement is meaningful only if it is either analytic or empirically verifiable [2]. On this view, mathematics and logic reduce to semantic conventions, and the verification principle delineates the boundary between science and metaphysics.

This was the underlying motivation of their program: to separate what we can know (science) from what we cannot. These ideas drew heavily on Wittgenstein's Tractatus Logico-Philosophicus of 1921. Although the influence of Logical Positivism eventually waned [3], its emphasis on verification presents intriguing parallels to contemporary challenges in artificial intelligence, particularly as LLMs become increasingly sophisticated and pervasive in scientific contexts [4].

While the pursuit of verifiability is crucial for ensuring reliability [5], an excessive focus on verification in LLMs for scientific discovery risks replicating the limitations that Logical Positivism imposed on philosophical and scientific thinking, potentially constraining LLMs' ability to contribute to creative and groundbreaking scientific ideas.

2. The Four Verification Traps in LLMs

In exploring these concerns, we conjecture that verification principles may resurface in the domain of LLMs, potentially mirroring the conundrums faced by the Logical Positivist movement. We therefore identify four potential challenges for LLMs from the perspective of verifiability, each inspired to some degree by the historical discourse on Logical Positivism:

2.1 The Verifiability Trap

Constraining LLMs to produce outputs verifiable through first-order logic against existing knowledge bases, implementing strict fact-checking mechanisms based on formal logical systems. This approach may prevent LLMs from generating novel hypotheses that cannot be immediately verified against current knowledge, thereby limiting their potential for breakthrough discoveries.
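As a toy illustration of how such a constraint behaves, the sketch below assumes a hypothetical knowledge base of (subject, relation, object) triples and a hypothetical `strict_filter` helper. Neither is taken from a real fact-checking system, and the entity names are placeholders. Any claim absent from the knowledge base is discarded, including one that might be a useful new hypothesis.

```python
# Hypothetical knowledge base: a set of (subject, relation, object) triples.
# All entity names are placeholders, not real scientific facts.
KNOWLEDGE_BASE = {
    ("compound_A", "binds", "protein_X"),
    ("protein_X", "regulates", "pathway_P"),
}


def strict_filter(claims, kb):
    """Keep only the claims that already appear verbatim in the knowledge base."""
    return [claim for claim in claims if claim in kb]


# Hypothetical LLM output: one known claim and one novel hypothesis.
llm_claims = [
    ("compound_A", "binds", "protein_X"),     # already in the knowledge base
    ("compound_A", "inhibits", "pathway_P"),  # novel, so it cannot be verified yet
]

accepted = strict_filter(llm_claims, KNOWLEDGE_BASE)
rejected = [claim for claim in llm_claims if claim not in accepted]

print("accepted:", accepted)
print("rejected:", rejected)
```

Under this filter the novel claim is rejected purely because it is not yet recorded, which is the behaviour the trap describes.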

2.2 The Empirical Testability Trap

Limiting LLM outputs to empirically testable hypotheses and predictions, directly linked to observable phenomena and measurable through controlled experiments. While ensuring practical applicability, this constraint may eliminate theoretical insights or abstract reasoning that could lead to paradigm shifts in scientific understanding.

2.3 The Public Testing Trap

Requiring LLMs to rely exclusively on the vast open-source code and public data for verification, enabling community-wide scrutiny. Although promoting transparency and reproducibility, this approach may limit access to cutting-edge research or proprietary insights that could enhance scientific understanding.

2.4 The Fallibilism Trap

Driving LLMs into a constant state of uncertainty and revision, frantically updating their knowledge bases in real-time for fear of becoming outdated or incorrect. This hypercorrective behavior may prevent LLMs from making confident scientific claims or pursuing consistent lines of reasoning necessary for sustained scientific inquiry.

3. Conceptual Framework of Verification Traps

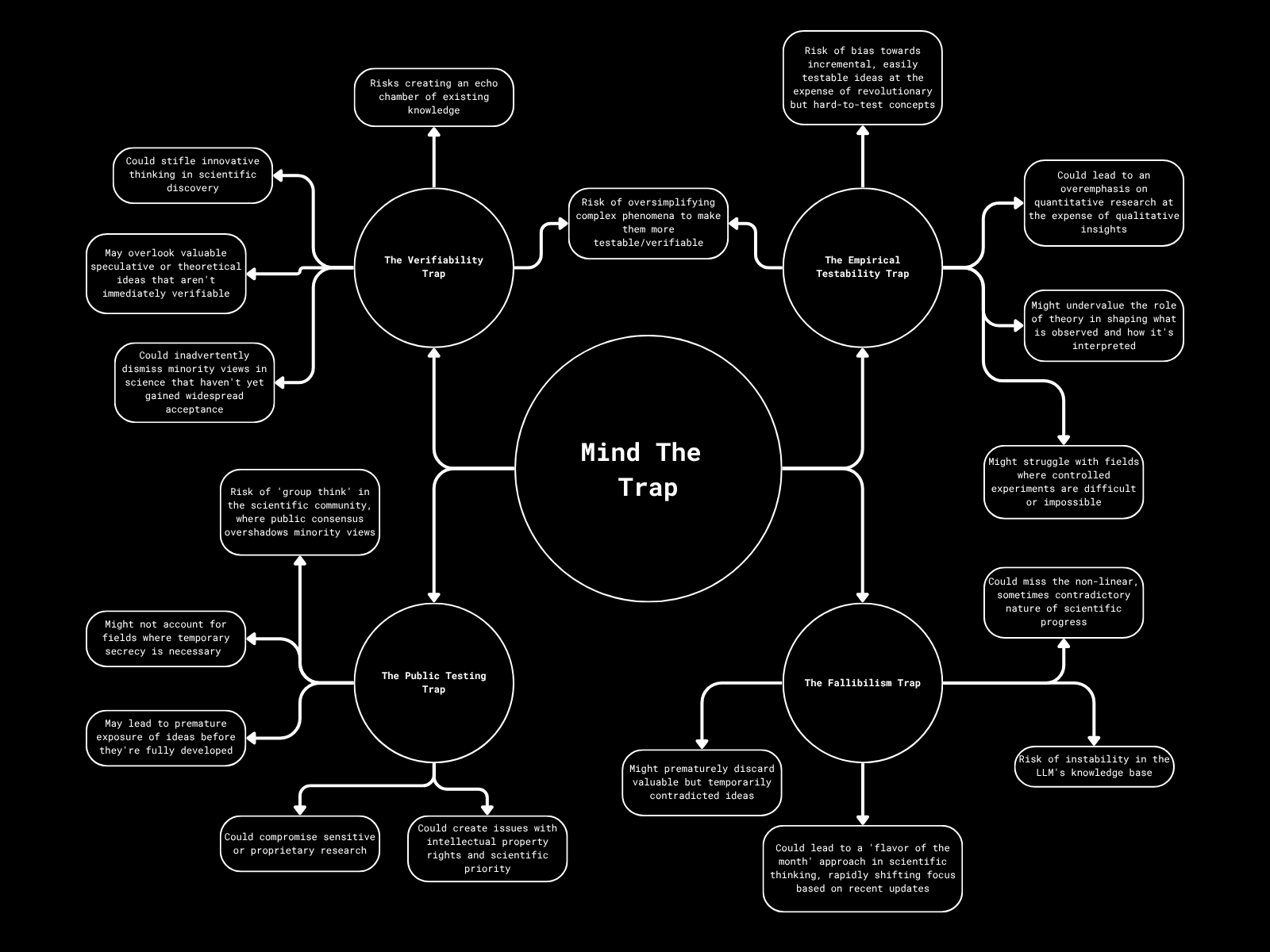

Figure 1: Conceptual framework of potential traps in LLM verification for scientific discovery. This diagram illustrates four primary challenges: The Verifiability Trap, The Empirical Testability Trap, The Public Testing Trap, and The Fallibilism Trap. Each trap is represented by a blue circle, with associated risks and limitations depicted via black nodes. The central "Mind The Trap" node emphasises the interconnected nature of these challenges. This framework highlights the potential pitfalls in applying stringent verification principles to LLMs in scientific contexts, drawing parallels with historical issues faced by Logical Positivism.

Figure 1 delineates potential traps in the verification of LLMs, drawing on historical lessons from Logical Positivism. It serves as a cautionary guide, prompting consideration of the complexities involved in defining and verifying knowledge in the context of LLMs and scientific discovery.

4. An Alternative Approach: Embracing Multiple Explanations

A complementary view, contrasting with a narrow focus on verification as a single criterion, would be akin to Epicurus' principle of multiple explanations. This idea, later elaborated by his follower Lucretius, is that several alternative explanatory hypotheses may be equally consistent with the observations.

Here, we suggest that using LLMs as generators of putative explanations consistent with the observations may be more beneficial than forcing them to verify each one. Used as a creative engine, an LLM offers investigators several putative routes (explanations) for understanding the observations. Hallucinations may then turn out to be a mechanism for generating a multitude of viable explanations consistent with the observational data.

This approach reframes what are traditionally viewed as LLM limitations as potential strengths, turning apparent weaknesses into tools for scientific exploration and hypothesis generation.
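The principle of multiple explanations can be sketched as a filter that retains every candidate consistent with the data rather than selecting a single winner. The example below is a minimal sketch under stated assumptions: candidate explanations are modelled as simple numeric functions, the observations are invented toy values, and the tolerance `tol` and the helper names `consistent` and `retain_explanations` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Observation = Tuple[float, float]  # (input x, measured value y)


def consistent(model: Callable[[float], float],
               observations: List[Observation],
               tol: float) -> bool:
    """True if the model reproduces every observation within the tolerance."""
    return all(abs(model(x) - y) <= tol for x, y in observations)


def retain_explanations(candidates: Dict[str, Callable[[float], float]],
                        observations: List[Observation],
                        tol: float = 0.5) -> List[str]:
    """Return the names of ALL candidates consistent with the observations."""
    return [name for name, model in candidates.items()
            if consistent(model, observations, tol)]


# Toy observations (invented for illustration only).
observations = [(0.0, 0.0), (1.0, 1.0), (2.0, 4.1)]

# Candidate explanations, standing in for hypotheses proposed by an LLM.
candidates = {
    "quadratic": lambda x: x ** 2,
    "linear": lambda x: 2 * x,
    "cubic_mix": lambda x: (x ** 3 + 2 * x) / 3,
}

retained = retain_explanations(candidates, observations)
print(retained)
```

Here both the quadratic and the cubic candidate survive, so the investigator is left with two explanations to discriminate between through further observation, while the linear candidate is dropped because it misses the second data point.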

5. Research Proposal: A Systematic Investigation

The following section outlines a comprehensive research proposal to systematically investigate these verification principles and develop practical frameworks for balanced LLM deployment in scientific contexts.

5.1 Research Questions

The proposed study is structured around three core research questions that address different aspects of the verification challenge:

RQ1: How do verification principles derived from Logical Positivism both enable and constrain automated scientific discovery by LLMs?

RQ2: What specific verification traps (as identified above) most significantly impact LLMs' scientific discovery capabilities?

RQ3: How can we develop a balanced framework that ensures reliability while preserving LLMs' capacity for creative scientific thinking?

5.2 Methodology

The research will employ a multi-method approach across three distinct phases over an eight-week timeline:

| Phase | Timeline | Activities | Tentative Outcome |
|---|---|---|---|
| Phase 1: Theoretical Analysis | Weeks 1–2 | (1.1) Conduct comprehensive literature review examining: (1.1.1) Historical development and critique of Logical Positivism; (1.1.2) Current verification methods in leading LLM systems; (1.1.3) Research on LLMs in scientific discovery contexts. (1.2) Develop theoretical mapping between positivist verification principles and current LLM evaluation methods. | (1.1) Detailed theoretical framework connecting verification principles to LLM functionality. (1.2) Identification of specific verification mechanisms in current LLM systems. |
| Phase 2: Empirical Testing | Weeks 3–6 | (2.1) Design experimental prompts to test effects of verification constraints on LLM outputs in scientific domains. (2.2) Use computational resources to test on open-source LLMs (Llama 3, Mistral, Falcon): (2.2.1) Enables comparative analysis across model architectures; (2.2.2) Allows deeper inspection of verification mechanisms. (2.3) Evaluate LLM responses under varying conditions: (2.3.1) Logical verification with knowledge bases; (2.3.2) Empirical testability; (2.3.3) Public/open-source validation. (2.4) Assess for: (2.4.1) Scientific accuracy; (2.4.2) Creativity and novelty; (2.4.3) Practical utility; (2.4.4) Explanation diversity. | (2.1) Empirical evidence demonstrating enabling and constraining effects of verification principles. (2.2) Quantitative and qualitative analysis of verification traps in action. (2.3) Comparative performance data across different verification approaches. |
| Phase 3: Framework Development | Weeks 7–8 | (3.1) Synthesize empirical and theoretical findings into a practical framework. (3.2) Develop recommendations for LLM design and application in scientific discovery. | (3.1) "Mind The Trap" framework for balanced verification in scientific LLMs that includes: (3.1.1) Guidelines for implementing verification without stifling innovation; (3.1.2) Strategies for detecting when verification constraints limit discovery; (3.1.3) Recommendations for prompt engineering to maximize scientific insight. |
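The Phase 2 design can be sketched as a grid of runs crossing models, verification conditions, and prompts, together with one possible measure of explanation diversity. This is a minimal sketch under several assumptions: the unconstrained `baseline` condition, the function names, and the choice of mean pairwise Jaccard distance over word sets as a diversity measure are all illustrative and are not specified by the proposal.

```python
from itertools import combinations, product

# Models and verification conditions named in the Phase 2 activities.
# The "baseline" (unconstrained) condition is an added assumption.
MODELS = ["Llama 3", "Mistral", "Falcon"]
CONDITIONS = [
    "baseline",
    "logical_verification",
    "empirical_testability",
    "public_validation",
]


def build_runs(prompts):
    """Enumerate every (model, condition, prompt) combination to be tested."""
    return [
        {"model": m, "condition": c, "prompt": p}
        for m, c, p in product(MODELS, CONDITIONS, prompts)
    ]


def jaccard_distance(a, b):
    """1 minus the word-set overlap of two texts (0 = identical, 1 = disjoint)."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    union = tokens_a | tokens_b
    if not union:
        return 0.0
    return 1.0 - len(tokens_a & tokens_b) / len(union)


def explanation_diversity(explanations):
    """Mean pairwise Jaccard distance; 0.0 when fewer than two explanations."""
    pairs = list(combinations(explanations, 2))
    if not pairs:
        return 0.0
    return sum(jaccard_distance(a, b) for a, b in pairs) / len(pairs)


runs = build_runs(["prompt 1", "prompt 2"])
print(len(runs))  # 3 models x 4 conditions x 2 prompts
```

Comparing `explanation_diversity` between the baseline and each constrained condition would give one rough indication of whether a verification constraint narrows the range of explanations a model offers. A word-overlap measure is crude, and an embedding-based distance may be a better fit in practice.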

5.3 Timeline Visualization

| Task | Duration (weeks) |
|---|---|
| Literature Review | 2 |
| Initial Conceptual Mapping | 1 |
| Design Experimental Prompts | 2 |
| Test Open Source LLMs | 2 |
| Compare Across Model Architectures | 2 |
| Analyze Experimental Results | 1 |
| Identify Patterns and Implications | 2 |
| Synthesize into Framework | 1 |
| Validate with Experts | 1 |
| Final Report & Recommendations | 1 |

5.4 Expected Outcomes and Impact

This research addresses a critical gap in current understanding of how verification principles influence LLM performance in scientific contexts. The proposed "Mind The Trap" framework will provide practical guidance for AI researchers, scientists, and policymakers working to maximize the scientific potential of LLMs while maintaining appropriate quality controls.

The framework's emphasis on balanced verification approaches, informed by historical analysis of Logical Positivism's limitations, represents a novel contribution to the field of AI safety and scientific methodology. By identifying specific verification traps and providing strategies for avoiding them, this research enables more effective deployment of LLMs in scientific discovery contexts.

Collaboration Opportunity: If you are interested in working on this research project, or would like to discuss potential collaborations on verification principles or LLM evaluation methodologies, feel free to reach out.

References

[1] A.J. Ayer. Language, Truth and Logic. Victor Gollancz Ltd, London, 1936.

[2] C.G. Hempel. Problems and changes in the empiricist criterion of meaning. Revue Internationale de Philosophie, 4(11):41–63, 1950.

[3] O. Hanfling. Logical Positivism. Columbia University Press, New York, 2003.

[4] E.M. Bender, T. Gebru, A. McMillan-Major, and S. Shmitchell. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, pages 610–623. ACM, 2021.

[5] A. Dafoe. AI governance: A research agenda. Governance of AI Program, Future of Humanity Institute, University of Oxford, 2018.